This is where I put interesting bits of source so I can find them again. Maybe they will be useful to you. Don’t expect consistency, documentation, bike messengers, or aspiring actors.

Guest Post: “Grumpy Sister” on Applying for Software Jobs

My sister gives some good advice:

Resume: Resumes are ONE PAGE long. (Plus a page for publications if you have them.) Nothing makes me grumpier than a new graduate with a three page resume. I do not care what you did in high school. I do not care about your ambitions for your life or your job search or your cat. I do not care to read paragraphs of text. If you include coursework (which you should only do if you’re applying right out of undergrad), only include unusual and high level courses. If you’re applying for a job in software, I should hope you’ve had Intro to CS.

Some places will ask you to do a presentation. If they do, YOU SHOULD ACE THE PRESENTATION. The entire rest of the day is going to be people asking you questions that may or may not be in your area of expertise. The only part of the interview you control is the presentation. There is simply no excuse for this not to be outstanding. Specifically:

- Practice it. You probably will be given a time limit and it will probably be short. You will not get through twenty minutes’ worth of content if you have to stop and think and um and er your way through every slide. Plus, if you’ve practiced enough, you’ll have some inflection to your voice and you’ll have some leisure to look around and smile and make eye contact instead of frantically trying to remember your next slide. If you can find a friend and make them sit through it ahead of time, even better.

- Words are bad. Pictures are good. Videos are great.

- Make sure your graphics are readable. If you put in a graph, the lines need to be thick enough to be seen, the axes should be labeled, and it should have a legend if it needs one. Also make sure you actually know what your graph is of (you think I’m kidding? I’ve been in not one but two presentations in which the candidate couldn’t remember what his graphs were).

- If you put math on the slide, define your variables. Please. Engineers can’t even agree on the correct letter for the square root of -1.

- Everyone likes to see results. Especially results that are videos.

- Be prepared for questions in the middle of the presentation.

- Present one thing that you know very well. Within that, pick one aspect you want to go into depth on and teach it. Do not just present a slide of math because you learned how to use Beamer in grad school and think it makes you look smart – it just makes you look like a poor communicator. If you have multiple things about which you think you could give an excellent presentation, by all means pick the one you think will be most interesting to your interviewers. But that’s a concern secondary to being able to explain it in your sleep.

- Don’t include a biography slide. We’ve all read your resume. Similarly, don’t waste five minutes telling me about the projects you’ve done that you’re not going to talk about today. If you think people might ask about them, make some backup slides.

Interviews: Almost any software interview is going to make you code on a whiteboard. Review your favorite algorithms textbook (I recommend CLRS if you don’t have a favorite), find some example Google interview problems online, and practice coding on a whiteboard. If you don’t have a whiteboard, tack some paper to the wall and use that. It’s really different from coding on a computer and it’s really important to get used to. In my very first interview ever, they asked me to code binary search and I messed it up. I’d actually been a TA for algorithms… and I got flustered with the different feel of the whiteboard and the interview and I made mistakes.
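Binary search is a good first whiteboard drill because its off-by-one traps are easy to fall into under pressure. Here is a minimal Python sketch (my own practice example, not the interview question itself) using a half-open interval [lo, hi), which keeps the loop condition and the two updates consistent with each other:

```python
from typing import Sequence


def binary_search(items: Sequence[int], target: int) -> int:
    """Return the index of target in the sorted sequence items, or -1 if absent."""
    lo, hi = 0, len(items)  # invariant: target, if present, lies in items[lo:hi]
    while lo < hi:  # stop when the half-open interval is empty
        mid = lo + (hi - lo) // 2  # written this way to avoid overflow in C/Java
        if items[mid] < target:
            lo = mid + 1  # mid is too small, so exclude it
        elif items[mid] > target:
            hi = mid  # hi is exclusive, so this already excludes mid
        else:
            return mid
    return -1


if __name__ == "__main__":
    print(binary_search([1, 3, 5, 7, 9], 7))
    print(binary_search([1, 3, 5, 7, 9], 4))
```

Practicing with one fixed convention (here, "hi is exclusive") is what keeps you from mixing `hi = mid` and `hi = mid - 1` halfway through the board.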

07/16/18

Sequencing DNA in our Extra Bedroom

MinION with flow-cell. The sensor is in the small window near the “SpotON” port. Video explanation of the principle of operation.

About a year ago, my girlfriend said, “I think we can sequence DNA in our extra room.” She showed me the website of Oxford Nanopore, which makes a USB DNA sequencer. We joked about it for a bit and that was that.

Until October, when I decided to buy her one for Christmas. I punched my credit card into their nice online store and waited for my shipping confirmation, which never arrived. Finally, I emailed them and found out I needed to join their “community.” At this point, I should mention that I’m not a biologist and I’m certainly not qualified to join a community of researchers. And they wanted to have a phone call. And this thing had better arrive by Dec. 25.

So I fessed up to my girlfriend (who has a PhD in genetics) and she wrote me a notecard with exactly what to say. I studied up. The day comes and I’m all nervous. The rep, I’ll call her Alice, phones and I proudly explain all the things I’m going to do, straight off my notecard.

Then Alice starts asking questions. I was not prepared for questions. Do I have a temperature-controlled fridge? Duh, of course (in the kitchen).[1] A freezer? Yes, obviously.[2] A centrifuge and thermocycler? “Uh, sure, yeah, I have that.”[3] Then Alice started asking questions about my research. Uh oh. I repeat something about bacterial colonies from my card but she isn’t buying it. I manage to get out that I understand this isn’t a spit-in-the-tube-and-done thing and that’s all I’ve got. She keeps pushing and I eventually admit that I’m really buying it for my girlfriend for Christmas. Apparently that’s okay, since once Alice finished laughing she said it would arrive by Dec. 25 but they don’t offer a gift-wrap service.

The box arrives, packed in some sweet dry ice stuff. Dec. 25 comes and I get a set of pipettes and a PCR machine.

The obvious thing to sequence is one of us, but the MinION can only do 1-2 billion bases per run;[4] to have decent quality for a human you need more like 90 billion.[5] We decide to swab our mouths before brushing our teeth and find out what’s in there. My girlfriend whips up some media, we plop our swabs in, and put the tubes in the $30 chicken egg incubator she found on Amazon. Two days later I’m informed that the cultures smell like “really really bad breath,” and that I “really ought to smell them for myself,” which I studiously avoid for the next two months.
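To put that gap in numbers: a quick Python check, assuming a human genome of roughly 3.1 billion bases and defining coverage as total bases sequenced divided by genome size (both are my assumptions, not taken from the footnotes).

```python
GENOME_BASES = 3.1e9  # approximate human genome size in bases (assumption)


def coverage(total_bases: float, genome_bases: float = GENOME_BASES) -> float:
    """Average depth: how many times each genome position is read, on average."""
    return total_bases / genome_bases


for label, bases in [("MinION run, low end", 1e9),
                     ("MinION run, high end", 2e9),
                     ("'decent quality' human genome", 90e9)]:
    print(f"{label}: {coverage(bases):.1f}x")
```

That puts a single run well under 1x coverage and the 90-billion figure at roughly 29x, or about 45 runs at 2 billion bases each.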

With the help of an $80 genomic DNA extraction kit, we cut up the cells, filter all their bits out, and have genomic DNA ready to go. First we run a gel electrophoresis to prove to ourselves that we didn’t mess up the extraction too much.

At this step, all the real biologists out there are assuming I’m going to talk about quantifying the DNA to make sure we had the right concentration before blowing a $500 flow-cell (the consumable part of the MinION) on this. Yeah, that would be a lot of work and the line is pretty bright in the gel…

We open the fridge to get out the sequencer’s flow-cell and notice that everything is frozen. Oh %$@*#@#*. I set the fridge to “10” because that seemed like a good idea. Alice definitely isn’t going to buy my warranty-return story. Two days later we’ve got a new thermometer and are praying that 100 freeze-thaw cycles are, uh, totally fine.

Happily the MinION comes with a calibration program that doesn’t seem to notice our substandard storage: all green. At this point we discover that Oxford Nanopore helpfully sends everyone a set of sample DNA to run first. We decide that sounds like a really good idea.

The promotional videos for the MinION claim, “simple 10 minute setup.” About two hours later, we’ve done the library prep, and we’re pipetting into the device. There are lots of warnings on their webpage about how you really can’t let air into the thing (permanent destruction of the flow-cell, blah blah). So of course the first thing we do is introduce an air bubble. But it only covers half the sensor. I think it’s the most expensive 5µL of air I’ve ever seen.

It turns out the sequencer produces so much data that the minimum requirements are a 1 TB SSD and a quad-core CPU. My girlfriend’s laptop has 200 GB and a dual-core CPU, so that will have to suffice. We fire it up and it starts producing reads. We’re over the moon. Six hours later the run finishes, but only 10% of the bases have been “called.” The way the system works is by reading tiny changes in electric current as the molecules pass through the nanopore. Apparently the signal processing is kind of hard because 2 days later it’s still going. I play Overwatch by myself.

Sequencing. You can see the 512 ports on the laptop screen. Approximately half are green (sequencing or waiting for DNA) and the other half are blue (air-bubbled).

The Oxford Nanopore website supports automated uploading and analysis of some datasets, including the calibration run. Our run produced 983 million (aligned) base pairs, which any professionals reading this are scoffing at, but I’m pretty sure Celera circa 2000 would have been impressed. I certainly am. The last time I did anything close to this, we tested PTC tasting with a gel electrophoresis that took all day and effectively sequenced 1 base.

We prep and load our mouth-bacteria genomic DNA into the sequencer. We’re reusing the flow-cell to save money and it’s hard to load correctly. We’re all paranoid about air bubbles now, but there’s a ton of them in the little fluid pipes. Eventually we look at each other, shrug, and put the sample in. It goes nowhere.

The library prep includes adding “loading beads” to the sample which give the liquid a ghostly white color. You can see if your sample is on the sensor by tracking the movement of that color, and ours clearly was stuck on the top of the port. Eventually we searched the forums and found someone else incompetent enough to have the same problem, with a solution that can be summarized as, “pull some liquid out of a different port and hope.” It worked great.

The sequencer uploads data in realtime, so after about 20 minutes, we were looking at a report of what lives in our mouth. Good news: it’s all normal. Bad news: ew. Turns out that we’re hosting viruses that are preying on the bacteria we’re also hosting.

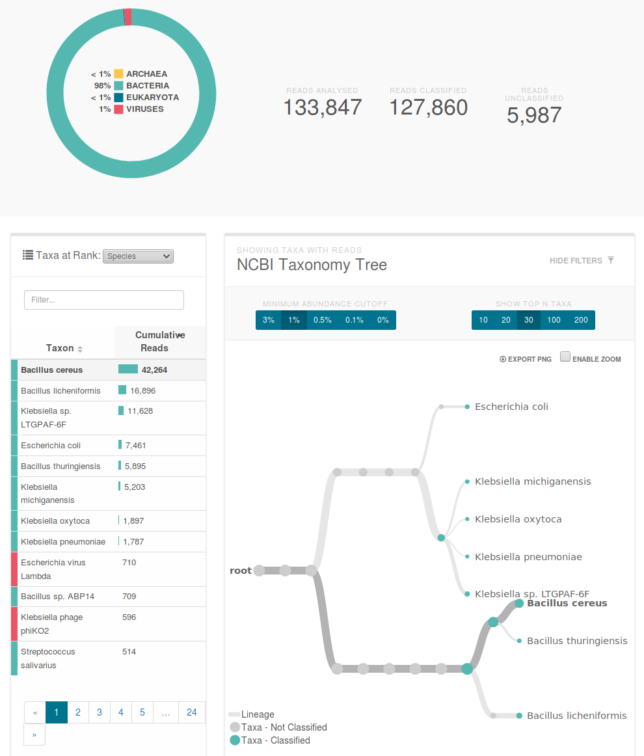

Classification of our data. The primary species are types of Bacillus and Klebsiella. You can see a Klebsiella phage, which is a virus that preys on the similarly named bacteria.

I can’t wait for the next time I get sick so I can confidently stride into the doctor’s office and inform them exactly what bacterial infection I have before throwing up on their table and finding out we massively contaminated the sample and I have a viral flu.

04/13/18

Getting into Graduate School (Engineering / CS)

These are my personal opinions. Other people will say other things.

Fellowships:

You must apply for fellowships to be competitive. It actually doesn’t really matter if you get one because you find out after you are admitted to the schools. But you need to be able to say on your application that you applied (they specifically ask). If the school thinks you might win one then they will be more likely to admit you because they won’t have to pay for you. The NSF deadline is /earlier than you think/. It is usually late October or early November… which means you need to ask for letters of recommendation in late September or early October.

You should at least apply to:

National Science Foundation Graduate Research Fellowship Program (NSF GRFP)

National Defense Science and Engineering Graduate (NDSEG)

and probably to:

DOE Office of Science Graduate Fellowship Program

DOE Computational Science Graduate Fellowship Program

When I was doing this as an undergraduate, it took me 4 to 6 hours per paper to read it, understand it, and come up with a question.

One thing that I found worthwhile was to read some of the sites out there on getting into grad school. I liked:

http://jxyzabc.blogspot.com/2008/08/cs-grad-school-part-1-deciding-to-apply.html

http://science-professor.blogspot.com/2010/02/grad-interviews.html

http://science-professor.blogs

11/1/16

Weird resolution video to Mac

avconv -i 2015-10-08.10-3dvisualization.avi -b 100000k -vcodec qtrle 2015-10-08.10-3dvisualization.mov

11/6/15

Convert 120fps MP4 to 30fps AVI

avconv -i autonomous-takeoff-from-launcher.mp4 -vf fps=fps=30 -b 100000k autonomous-takeoff-from-launcher.avi

09/10/15

AviSynth + VirtualDub + Linux + GoPro Hero 4 Black 120fps video

Editing 120 fps GoPro Hero 4 Black 1080p video without video conversion.

- Install AviSynth and VirtualDub for Linux

- Make sure you are using a recent version of VirtualDub (>= 1.10).

- Install the FFMpegSource plugin by downloading it (version 2.20-icl only) and placing all of its files from:

ffm2-2.20-icl/x86

in your

~/.wine/drive_c/Program Files (x86)/AviSynth 2.5/plugins

directory.

- Finally, open your MP4 file in your .avs:

a = FFAudioSource("GOPR0002.MP4")
v = FFVideoSource("GOPR0002.MP4")
v = AudioDub(v, a)
return v

- (Optional, allows VirtualDub to open MP4 files directly) Download FFInputDriver and unpack it into VirtualDub-1.10.4/plugins32.

- Note: this is important because the AviSynth plugin seems to fail when loading huge files. Use this to open your source in VirtualDub and then trim to the relevant part.


I’ve also been using the avisynth GUI proxy with wine along with Avidemux (in apt-get as avidemux) to improve load times on Linux.

- File > Connect to avsproxy in Avidemux

04/3/15

view images in order using feh

feh `ls -v *.png`
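`ls -v` sorts numbers inside names by value, so frame2.png comes before frame10.png instead of after it as in plain alphabetical order. If you are doing the same thing in a Python script, a rough equivalent key function is below (an approximation: GNU version sort handles more cases than this).

```python
import re
from typing import List, Union


def natural_key(name: str) -> List[Union[str, int]]:
    """Split a file name into text and digit runs; digit runs compare as integers."""
    parts = re.split(r"(\d+)", name)  # captured digit runs land at the odd indices
    return [int(part) if i % 2 else part for i, part in enumerate(parts)]


frames = ["frame10.png", "frame2.png", "frame1.png"]
print(sorted(frames))                   # plain string order: frame1, frame10, frame2
print(sorted(frames, key=natural_key))  # numeric-aware order: frame1, frame2, frame10
```

Using the index parity rather than str.isdigit() guarantees that text always lines up with text and integers with integers when two keys are compared.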

10/16/14

Fitting nonlinear (small aircraft) models from experimental data

My labmate Ani Majumdar wrote up some useful notes from fitting models for our experimental data (bolded text is mine). See also Table 3.1 from my thesis:

I made progress on this. The model seems quite good now (comparing simulations using matlab’s sysid toolbox against experimental flight trials, and looking at tracking performance on some preliminary experiments). Here are the things I tried in chronological order (and some lessons I learned along the way):

(1) Get parametric model from textbook (Aircraft Control and Simulation [Stevens], and Flight Dynamics [Stengel]), then do physical experiments on the plane to determine the parameters, and hope that F = ma.

The following parameters had to be measured/estimated:

– Physical dimensions (mass, moments of inertia, wing span/area, rudder/elevator dimensions, etc.)
  – These are easy to just measure directly.

– Relationship between servo command (0-255) and deflection angle of control surfaces
  – This is simple to measure with a digital inclinometer/protractor (the reason this is not trivial is that the servo deflection gets transmitted through the wires to the actual control surface deflection, so you actually do have to measure it).

– Relationship between throttle command and airspeed over wings
  – I measured this using a hot-wire anemometer placed above the wings for different values of throttle commands (0-255). The relationship looks roughly like airspeed = sqrt(c*throttle_command), which is consistent with theory.

– Relationship between throttle command and airspeed over elevator/rudder
  – Same experiment as above (the actual airspeed is different though).

– Relationship between throttle command and thrust produced
  – I put the plane on a low-friction track and used a digital force-meter (really a digital scale) to measure the thrust for different throttle commands. The plane pulls on the force-meter and you can read out the force values. This is a scary experiment because there’s the constant danger of the plane getting loose and flying off the track! You also have to account for static friction. You can either just look at the value of predicted thrust at 0 and subtract it off, or you can tilt the track to see when the plane starts to slide (this can be used to compute the force: m*g*sin(theta)). In my case, these were very close. The relationship was linear, but the thrust saturates at around a throttle command of 140.

– Aerodynamic parameters (e.g. lift/drag coefficients, damping terms, moment induced by angle derivatives).
  – I could have put the plane in a wind-tunnel for some of these, but decided not to. I ended up using a flat plate model.

(2) Use matlab’s sysid toolbox to fit stuff

Approach (1) wasn’t giving me particularly good results. So, I tried just fitting everything with matlab’s sysid toolbox (prediction error minimization, pem.m). I collected a bunch of experimental flight trials with sinusoid-like inputs (open-loop, of course).

This didn’t work too well either.

(3) Account for delay.

Finally, I remembered that Tim and Andy noticed a delay of about 50 ms when they were doing their early prophang experiments (they tried to determine this with some physical experiments). So, I took the inputs from my experimental trials and shifted the control input tapes by around 50 ms (actually 57 ms).

When I used matlab’s sysid toolbox to fit parameters after shifting the control input commands to account for delay, the fits were extremely good!

I noticed this when I was fitting a linear model to do LQR for prophang, back in October 2011. The fits are not good if you don’t take delay into account (duh). Got to remember this the next time.
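The delay lesson is easy to reproduce outside MATLAB. Below is a minimal Python sketch (my own, not the toolbox's pem and not the code used on the plane) for a hypothetical scalar model x[k+1] = a*x[k] + b*u[k-d]: for each candidate delay d it fits a and b by ordinary least squares and keeps the delay that leaves the smallest residual. The model, the function names, and the synthetic data are all assumptions for illustration.

```python
import random
from typing import List, Sequence, Tuple


def simulate(a: float, b: float, delay: int, n: int,
             seed: int = 0) -> Tuple[List[float], List[float]]:
    """Synthetic log of x[k+1] = a*x[k] + b*u[k - delay] driven by random inputs."""
    rng = random.Random(seed)
    u = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    x = [0.0]
    for k in range(n - 1):
        x.append(a * x[k] + b * (u[k - delay] if k >= delay else 0.0))
    return x, u


def fit_with_delay(x: Sequence[float], u: Sequence[float], delay: int,
                   start: int) -> Tuple[float, float, float]:
    """Least-squares (a, b) for x[k+1] = a*x[k] + b*u[k - delay], plus the residual SSE.

    Only samples k >= start are used, so every candidate delay is scored on
    the same data (start must be >= delay)."""
    rows = [(x[k], u[k - delay], x[k + 1]) for k in range(start, len(x) - 1)]
    sxx = sum(xk * xk for xk, _, _ in rows)
    sxu = sum(xk * uk for xk, uk, _ in rows)
    suu = sum(uk * uk for _, uk, _ in rows)
    sxy = sum(xk * y for xk, _, y in rows)
    suy = sum(uk * y for _, uk, y in rows)
    det = sxx * suu - sxu * sxu  # solve the 2x2 normal equations by Cramer's rule
    a = (sxy * suu - suy * sxu) / det
    b = (suy * sxx - sxy * sxu) / det
    sse = sum((y - a * xk - b * uk) ** 2 for xk, uk, y in rows)
    return a, b, sse


def estimate_delay(x: Sequence[float], u: Sequence[float], max_delay: int) -> int:
    """Candidate delay (in samples) whose fit leaves the smallest residual."""
    return min(range(max_delay + 1), key=lambda d: fit_with_delay(x, u, d, max_delay)[2])


if __name__ == "__main__":
    x, u = simulate(a=0.9, b=0.5, delay=3, n=200)
    for d in range(6):
        a, b, sse = fit_with_delay(x, u, d, start=5)
        print(f"delay {d}: a={a:.3f} b={b:.3f} sse={sse:.3g}")
    print("best delay (samples):", estimate_delay(x, u, max_delay=5))
```

To convert samples to time, multiply by the sample period; at a hypothetical 100 Hz log, 57 ms is about 6 samples. Scoring every candidate on the same samples (the `start` argument) matters, since otherwise larger delays would look better just because they are fit to fewer points.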

Here is a summary of what worked, and how I would go about doing it if I had to do it again (which I probably will have to at some point on the new hardware):

(1) Do some physical experiments to determine the stuff that is easy to determine. And to get the parameteric form of the dependences (e.g. thrust is linear with the 0-255 prop command, and it saturates around 140).

(2) Use matlab’s sysid toolbox to fit parameters with bounds on the parameters after having accounted for delay. The bounds are important. If some of your outputs are not excited (e.g. yaw on our plane and pitch to some degree), pem will make your parameters aphysical (e.g. extremely large) in order to eke out a small amount of prediction performance. As an extreme example, let’s say you have a system:

xdot = f(x) + c1*u1 + c2*u2.

Let’s say all the control inputs you used for sysid had very small u2 (let’s say 0 just for the sake of argument). Then any value of c2 is consistent with the data, so you can just make c2 = 10^6, for example, which is physically meaningless. If u2 is small (but non-zero) and is overshadowed by the effect that u1 has, then some non-physical values of c2 could lead to very slight improvements in prediction error and could be preferred over physical values.

So, bounding parameters to keep them physically meaningful is a good idea in my experience. Of course, ideally you would just collect enough data that this is not a problem, but this can be hard (especially for us since the experimental arena is quite confined).
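Here is a small Python sketch of a bounded fit for the two-input example above, with f(x) dropped so the model is y = c1*u1 + c2*u2 (a simplifying assumption). pem does far more than this; the sketch only shows the mechanics: take the ordinary least-squares answer if it lies inside the bounds, otherwise the optimum sits on an edge of the bounding box, so pin one parameter at each of its bounds, fit the other, and keep the best. The bounds and the synthetic data are made up.

```python
import random
from typing import List, Sequence, Tuple

Bounds = Tuple[float, float]


def sse(c1: float, c2: float, u1: Sequence[float], u2: Sequence[float],
        y: Sequence[float]) -> float:
    """Sum of squared prediction errors for y ≈ c1*u1 + c2*u2."""
    return sum((yi - c1 * a - c2 * b) ** 2 for a, b, yi in zip(u1, u2, y))


def fit_1d(targets: Sequence[float], feature: Sequence[float], lo: float, hi: float) -> float:
    """Best c in [lo, hi] for targets ≈ c*feature; the 1-D problem is convex, so clipping is exact."""
    den = sum(f * f for f in feature)
    c = sum(f * t for f, t in zip(feature, targets)) / den if den else 0.0
    return min(max(c, lo), hi)


def fit_bounded(u1: Sequence[float], u2: Sequence[float], y: Sequence[float],
                b1: Bounds, b2: Bounds) -> Tuple[float, float]:
    """Least squares for y ≈ c1*u1 + c2*u2 with c1 in b1 and c2 in b2 (finite bounds)."""
    s11 = sum(a * a for a in u1)
    s12 = sum(a * b for a, b in zip(u1, u2))
    s22 = sum(b * b for b in u2)
    s1y = sum(a * yi for a, yi in zip(u1, y))
    s2y = sum(b * yi for b, yi in zip(u2, y))
    det = s11 * s22 - s12 * s12
    if det != 0:
        c1 = (s1y * s22 - s2y * s12) / det
        c2 = (s2y * s11 - s1y * s12) / det
        if b1[0] <= c1 <= b1[1] and b2[0] <= c2 <= b2[1]:
            return c1, c2  # the unconstrained optimum is already physical
    # Otherwise the optimum lies on an edge of the box: pin one parameter, fit the other.
    candidates: List[Tuple[float, float]] = []
    for c1 in b1:
        candidates.append((c1, fit_1d([yi - c1 * a for a, yi in zip(u1, y)], u2, *b2)))
    for c2 in b2:
        candidates.append((fit_1d([yi - c2 * b for b, yi in zip(u2, y)], u1, *b1), c2))
    return min(candidates, key=lambda c: sse(c[0], c[1], u1, u2, y))


if __name__ == "__main__":
    # Synthetic data in the spirit of the example: u2 is barely excited.
    rng = random.Random(0)
    u1 = [rng.uniform(-1, 1) for _ in range(100)]
    u2 = [1e-5 * rng.uniform(-1, 1) for _ in range(100)]
    y = [2.0 * a + 0.5 * b + rng.gauss(0, 0.01) for a, b in zip(u1, u2)]
    print("effectively unbounded:", fit_bounded(u1, u2, y, (-1e9, 1e9), (-1e9, 1e9)))
    print("bounded:              ", fit_bounded(u1, u2, y, (0.0, 5.0), (0.0, 2.0)))
```

With u2 this small, the noise alone can push the unbounded c2 far from its true value, while the bounded fit cannot leave the range you declared physical.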

Another good thing to do is to make sure you can overfit with the model you have. This sounds stupid, but is actually really important. If the parametric model you have is incorrect (or if you didn’t account for delay), then your model is incapable of explaining even small amounts of data. So, as a sanity check, take just a small piece of data and see if you can fit parameters to explain that piece of data perfectly. If you can’t, something is wrong. I was seeing this before I accounted for delay and this tipped me off that I was missing something fundamental. (it took me a little while longer to realize that it was delay :). I also tried this on the Acrobot on the first day I tried to use Elena’s model to do sysid with. Something was off again (in this case, it was a sign error – my fault, not hers).

(3) Finally, account for delay when you run the controller by predicting the state forwards in time to compute the control input. This works well for Joe, and seems to be working well for me so far.
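A minimal Python sketch of that forward prediction, assuming a hypothetical scalar discrete-time model x[k+1] = a*x[k] + b*u[k-d] and a simple proportional law u = -gain*x (neither is the plane's actual model or controller). The controller remembers the d commands it has sent that have not reached the actuator yet, rolls the model forward through them, and computes the new command from that predicted state instead of the measured one.

```python
from collections import deque
from typing import Deque, Iterable, List


def predict_forward(x: float, pending: Iterable[float], a: float, b: float) -> float:
    """Roll x[k+1] = a*x[k] + b*u forward through commands sent but not yet applied."""
    for u in pending:
        x = a * x + b * u
    return x


def simulate_closed_loop(a: float, b: float, gain: float, delay: int, x0: float,
                         steps: int, use_prediction: bool = True) -> List[float]:
    """Plant x[k+1] = a*x[k] + b*u[k - delay] under the control law u = -gain * estimate."""
    pending: Deque[float] = deque([0.0] * delay)  # oldest command is applied next
    x = x0
    trajectory = [x]
    for _ in range(steps):
        # Estimate the state at the moment this new command will actually act.
        estimate = predict_forward(x, pending, a, b) if use_prediction else x
        pending.append(-gain * estimate)
        x = a * x + b * pending.popleft()  # the oldest queued command reaches the plant now
        trajectory.append(x)
    return trajectory


if __name__ == "__main__":
    # Deadbeat gain (a - b*gain = 0) on a plant with a 2-sample delay.
    print("with prediction:   ", simulate_closed_loop(1.0, 1.0, 1.0, 2, 1.0, 12))
    print("without prediction:", simulate_closed_loop(1.0, 1.0, 1.0, 2, 1.0, 12,
                                                      use_prediction=False))
```

In this toy case the predicted version settles to zero three samples in, while the same gain without prediction oscillates with growing amplitude. On real hardware the prediction is only as good as the model and the delay estimate.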

09/24/14

Converting between AprilCal and OpenCV

I recently wanted to use AprilCal from the April Robotics Toolkit’s camera suite for camera calibration but write my code in OpenCV. I got a bit stuck converting between formats, so Andrew Richardson helped me out.

1) Run AprilCal’s calibration.

2) Enter the GUI’s command line mode and export to a bunch of different model formats with

3) Find the file for CaltechCalibration,kclength=3.config, which orders the distortion parameters like this: radial_1, radial_2, tangential_1, tangential_2, radial_3.

4) Your OpenCV camera matrix is:

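$$
M = \begin{bmatrix} \mathrm{fc}[0] & 0 & \mathrm{cc}[0] \\ 0 & \mathrm{fc}[1] & \mathrm{cc}[1] \\ 0 & 0 & 1 \end{bmatrix}
$$

5) Your OpenCV distortion vector is:

$$
D = \begin{bmatrix} \mathrm{kc}[0] \\ \mathrm{kc}[1] \\ \mathrm{lc}[0] \\ \mathrm{lc}[1] \\ \mathrm{kc}[2] \end{bmatrix}
$$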
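For reference, the same bookkeeping in Python. This is a sketch: it assumes you have already read fc, cc, kc and lc out of the exported config (parsing that file is not shown), and it returns plain lists.

```python
from typing import List, Sequence


def opencv_camera_matrix(fc: Sequence[float], cc: Sequence[float]) -> List[List[float]]:
    """3x3 intrinsic matrix M from focal lengths fc and principal point cc."""
    return [[fc[0], 0.0, cc[0]],
            [0.0, fc[1], cc[1]],
            [0.0, 0.0, 1.0]]


def opencv_distortion(kc: Sequence[float], lc: Sequence[float]) -> List[float]:
    """Distortion vector D in OpenCV order (k1, k2, p1, p2, k3).

    kc holds the three radial terms and lc the two tangential terms."""
    return [kc[0], kc[1], lc[0], lc[1], kc[2]]


if __name__ == "__main__":
    # Made-up calibration values, only to show the layout.
    print(opencv_camera_matrix([610.2, 609.8], [320.5, 241.1]))
    print(opencv_distortion([-0.31, 0.12, -0.02], [0.001, -0.0004]))
```

If you use OpenCV's Python bindings, wrap the results with numpy.array before passing them to functions such as cv2.undistort(image, camera_matrix, dist_coeffs).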

08/13/14

Mount multiple partitions in a disk (dd) image

sudo kpartx -av disk_image.img

06/20/14

Debugging cron

The way to log cronjobs:

* * * * * /home/abarry/mycommand 2>&1 | /usr/bin/logger -t mycommand

After the job has run, find its output in syslog:

grep mycommand /var/log/syslog

Check for missing environment variables: compare the output of env run from cron with env run in your shell.
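To make that comparison less tedious, here is a small Python sketch (mine, not part of the original note). It assumes you have captured both environments to files, for example with a temporary crontab line `* * * * * env > /tmp/cron-env.txt` and `env > /tmp/shell-env.txt` in your shell; the file paths and the script name below are placeholders.

```python
import sys
from typing import Dict


def parse_env(text: str) -> Dict[str, str]:
    """Parse `env` output (one NAME=value per line) into a dict."""
    result: Dict[str, str] = {}
    for line in text.splitlines():
        name, sep, value = line.partition("=")
        if sep:  # skip lines without '=', e.g. pieces of multi-line values
            result[name] = value
    return result


def env_diff(shell: Dict[str, str], cron: Dict[str, str]) -> Dict[str, str]:
    """Variables set in the shell that cron lacks or sets to a different value."""
    return {name: value for name, value in shell.items() if cron.get(name) != value}


if __name__ == "__main__" and len(sys.argv) == 3:
    with open(sys.argv[1]) as f_shell, open(sys.argv[2]) as f_cron:
        missing = env_diff(parse_env(f_shell.read()), parse_env(f_cron.read()))
    for name, value in sorted(missing.items()):
        print(f"{name}={value}")
```

Run it as `python3 envdiff.py /tmp/shell-env.txt /tmp/cron-env.txt`; PATH is the usual suspect.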

06/11/14

Force a CPU frequency on an odroid (running linaro 3.0.x kernel)

Update: This works on 3.8.x kernels too. I used MIN_SPEED and MAX_SPEED of 1704000 instead.

For the 3.8.x kernels:

CPU speed file:

/sys/devices/system/cpu/cpu0/cpufreq/scaling_cur_freq

Temperature file:

/sys/class/thermal/thermal_zone0/temp

From here: http://forum.odroid.com/viewtopic.php?f=65&t=2795

sudo apt-get install cpufrequtils

Create a file: /etc/default/cpufrequtils with these contents:

ENABLE="true"
GOVERNOR="performance"
MAX_SPEED=1700000
MIN_SPEED=1700000

Note: If your CPU temperature hits 85 C, this will be overridden to force it down to 800 MHz. Check with this script:

Burn CPU:

while true
do
  sleep .5
  cpufreq-info | grep "current CPU"
  sudo cat /sys/devices/platform/tmu/temperature
done
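If you would rather read the 3.8.x files listed above, here is a Python sketch. It assumes scaling_cur_freq is in kHz (the same unit as the cpufrequtils values above) and that thermal_zone0/temp is in millidegrees Celsius, which is the usual sysfs convention but worth checking on your kernel; the older tmu/temperature file may use different units.

```python
import time
from pathlib import Path

CPU_FREQ = Path("/sys/devices/system/cpu/cpu0/cpufreq/scaling_cur_freq")  # kHz
THERMAL = Path("/sys/class/thermal/thermal_zone0/temp")  # assumed millidegrees C
THROTTLE_C = 85.0  # temperature at which the 800 MHz override kicks in (from the note above)


def status_line(freq_raw: str, temp_raw: str) -> str:
    """Format raw sysfs readings as MHz and degrees C, flagging the throttle limit."""
    mhz = int(freq_raw.strip()) / 1000.0
    celsius = int(temp_raw.strip()) / 1000.0
    flag = "  <-- throttle limit" if celsius >= THROTTLE_C else ""
    return f"{mhz:.0f} MHz, {celsius:.1f} C{flag}"


def monitor(samples: int = 20, interval_s: float = 0.5) -> None:
    """Print frequency and temperature `samples` times; run this on the board itself."""
    for _ in range(samples):
        print(status_line(CPU_FREQ.read_text(), THERMAL.read_text()))
        time.sleep(interval_s)
```

Call monitor() from a second terminal while the CPU is under load to watch for the drop to 800 MHz.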

06/3/14

OpenCV and saving grayscale (CV_8UC1) videos

OpenCV does some very odd things when saving grayscale videos. Specifically, it appears to convert them to RGB / BGR even if you are saving with a grayscale codec like Y800. This Stack Overflow post confirms it, as does opening the files in a hex editor.

The real issue is that this conversion is lossy. When saving a grayscale image with pixel values of less than 10, they are converted to 0! Yikes!

The only reasonable solution I have found is to save all my movies as directories full of PGM files (similar to PPM but grayscale only).
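Writing PGM needs nothing beyond the standard library. A minimal Python sketch, assuming each frame is an 8-bit grayscale image held as a list of rows; the frame file naming is my own choice, not part of the original workflow.

```python
from pathlib import Path
from typing import Sequence


def pgm_bytes(pixels: Sequence[Sequence[int]]) -> bytes:
    """Encode an 8-bit grayscale image (a list of rows) as binary PGM (P5)."""
    height, width = len(pixels), len(pixels[0])
    if any(len(row) != width for row in pixels):
        raise ValueError("all rows must have the same width")
    header = f"P5\n{width} {height}\n255\n".encode("ascii")
    # bytes() raises ValueError for values outside 0-255, so nothing is silently altered.
    return header + bytes(value for row in pixels for value in row)


def save_frame(directory: str, index: int, pixels: Sequence[Sequence[int]]) -> Path:
    """Write one frame as <directory>/frame000042.pgm (zero-padded so names sort in order)."""
    path = Path(directory) / f"frame{index:06d}.pgm"
    path.write_bytes(pgm_bytes(pixels))
    return path
```

The pixel bytes are written exactly as given, so values below 10 survive. If you already have OpenCV loaded, cv2.imwrite with a .pgm file name does the same job.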

04/26/14

Move windows between monitors using a hotkey in xfce

Also should work in other window managers.

Install xdotool

sudo apt-get install xdotool

Figure out the geometry of the destination by moving a terminal to the target location and size and running:

xfce4-terminal --hide-borders
xdotool getactivewindow getwindowgeometry

Giving for example,

Window 102778681
  Position: 2560,1119 (screen: 0)
  Geometry: 1050x1633

Sometimes terminals size themselves oddly, so you can do this instead:

ID=`xdotool search firefox`

And then use the ID:

xdotool getwindowgeometry $ID

There’s also an issue with the window decorations, so you’ll have to correct for that. Mine were 22 pixels tall.

Finally setup a hotkey with:

xdotool getactivewindow windowmove 2560 1119 windowsize 1050 1633

My hotkeys:

xdotool getactivewindow windowmove 440 0 windowsize 1680 1028 # top monitor
xdotool getactivewindow windowmove 0 1050 windowsize 1277 1549 # left on middle monitor
xdotool getactivewindow windowmove 1280 1050 windowsize 1277 1549 # right on middle monitor
xdotool getactivewindow windowmove 2560 1075 windowsize 1050 1633 # right monitor

03/6/14

Useful AviSynth Functions

I wrote a few useful AviSynth functions for:

- Automatically integrating videos from different cameras: ConvertFormat(…)

import("abarryAviSynthFunctions.avs")

goproFormat = AviSource("GOPR0099.avi")

framerate = goproFormat.framerate

audiorate = goproFormat.AudioRate

width = goproFormat.width

height = goproFormat.height

othervid = AviSource("othervid.avi")

othervid = ConvertFormat(othervid, width, height, framerate, audiorate)

vid1 = AviSource("GOPR0098.avi")

# trim to the point that you want

vid1 = Trim(vid1, 2484, 2742)

# make frames 123-190 slow-mo, maximum slow-mo factor is 0.25, use 15 frames to transition from 1x to 0.25x.

vid1 = TransitionSlowMo(vid1, 123, 190, 0.25, 15)

03/5/14

“Input not supported,” blank screen, or monitor crash when using HDMI to DVI adapter

You’re just going along and all of a sudden a terminal bell or something causes your monitor to freak out and crash. Restarting the monitor sometimes fixes the problem.

Turns out that sound is coming out through the HDMI adapter which your monitor thinks is video, and then everything breaks. Mute your sound.

12/9/13

Popping / clipping / bad sound on Odroid-U2

Likely your pulseaudio configuration is messed up. Make sure that pulseaudio is running / working.

12/9/13

The (New) Complete Guide to Embedded Videos in Beamer under Linux

We used to use a pdf/flashvideo trick. It was terrible. This is so. much. better:

Update: pdfpc is now at a recent version in apt.

1) Install

sudo apt-get install pdf-presenter-console

2) Test it with my example: [on github] [local copy]

# use -w to run in windowed mode
pdfpc -w video_example.pdf

3) You need a poster image for every movie. Here’s my script to automatically generate posters for all videos in the “videos” directory (give it as its only argument the path containing a “videos” directory that it should search). It only runs on *.avi files, but that’s a choice, not an avconv limitation.

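#!/bin/bash
# Make a poster (first frame as JPEG) for every .avi under $1/videos/ that lacks one.
# -print0 / read -d '' keep file names with spaces intact.
find "$1/videos/" -type f -name "*.avi" -print0 | while IFS= read -r -d '' file; do
    poster="${file%.avi}.jpg"
    # skip videos that already have a poster
    if [ ! -e "$poster" ]; then
        # < /dev/null keeps avconv from reading the file list on stdin
        avconv -i "$file" -vframes 1 -an -f image2 -y "$poster" < /dev/null
    fi
done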

4) Now include your movies in your .tex. I use an extra style file that makes this easy: extrabeamercmds.sty (github repo). Include that (\usepackage{extrabeamercmds} with it in the same directory as your .tex) and then:

\fullFrameMovieAvi{videos/myvideo}

or for a non-avi / other poster file:

\fullFrameMovie{videos/myvideo.avi}{videos/myvideo.jpg}

If you want to include the movie yourself, here’s the code:

\href{run:myvideo.avi?autostart&loop}{\includegraphics[width=\paperwidth,height=0.5625\paperwidth]{myposter.jpg}}

Installing from source:

1) Install dependencies:

sudo apt-get install cmake libgstreamer1.0-dev libgstreamer-plugins-base1.0-dev libgee-0.8-dev librsvg2-dev libpoppler-glib-dev libgtk2.0-dev libgtk-3-dev gstreamer1.0-*

Install new version of valac:

sudo add-apt-repository ppa:vala-team
sudo apt-get update
sudo apt-get install valac-0.30

2) Download pdfpc:

git clone https://github.com/pdfpc/pdfpc.git

3) Build it:

cd pdfpc
mkdir build
cd build
cmake ../
make -j8
sudo make install

Thanks to Jenny for her help on this one!

12/2/13

AviSynth: Add a banner that 1) wipes across to the right and 2) fades in

From Jenny.

Prototype:

function addWipedOverlay(clip c, clip overlay, int x, int y, int frames, int width)

Arguments:

c: the video clip you want to overlay

overlay: your banner

x: x position in image for banner to slide across from

y: y position in image

frames: the number of frames in which to accomplish wiping and fading in

width: the width of the overlay banner (if this is too big you will get an error from crop about destination width less than zero)

Notes:

Assumes a transparency channel. To get this, you need to load your image with the pixel_type="RGB32" flag.

Example:

img = ImageSource("BannerName.png", pixel_type="RGB32")

clip1 = addWipedOverlay(clip1, img, 0, 875, 30, 1279)

This is actually two functions because it uses recursion to implement a for loop:

function addWipedOverlay(clip c, clip overlay, int x, int y, int frames, int width)

{

return addWipedOverlayRecur(c, overlay, x, y, frames, width, 0)

}

function addWipedOverlayRecur(clip c, clip overlay, int x, int y, int frames, int width, int iteration)

{

cropped_overlay = crop(overlay, int((1.0 - 1.0 / frames * iteration) * width), 0, 0, 0)

return (iteration == frames)

\ ? Overlay(Trim(c, frames, 0), overlay, x = x, y = y, mask=overlay.ShowAlpha)

\ : Trim(Overlay(c, cropped_overlay, x = x, y = y, mask = cropped_overlay.ShowAlpha, opacity = 1.0 / frames * iteration), iteration, (iteration == 0) ? -1 : iteration) + addWipedOverlayRecur(c, overlay, x, y, frames, width, iteration + 1)

}
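To see what the recursion does frame by frame, here is the same arithmetic in Python (an illustration, not AviSynth code): for transition frame i it mirrors the crop amount and the opacity computed in addWipedOverlayRecur. Frame 0 crops `width` pixels off the banner's left edge, so if `width` is larger than the banner itself, Crop is asked for a negative width, which is the error mentioned above.

```python
from typing import List, Tuple


def wipe_schedule(frames: int, width: int) -> List[Tuple[int, int, float]]:
    """(iteration, pixels cropped off the banner's left edge, opacity) per transition frame.

    Mirrors the expressions in addWipedOverlayRecur; after the last of these
    frames the full banner is overlaid at full opacity."""
    return [(i, int((1.0 - 1.0 / frames * i) * width), 1.0 / frames * i)
            for i in range(frames)]


for iteration, crop_left, opacity in wipe_schedule(frames=4, width=100):
    print(iteration, crop_left, opacity)
```

The visible strip grows by about width/frames pixels per frame while the opacity rises linearly, which is what produces the combined wipe-and-fade.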

11/30/13

Convert rawvideo / Y800 / gray to something AviSynth can read

avconv -i in.avi -vcodec mpeg4 -b 100000k out.avi

or in parallel:

find ./ -maxdepth 1 -name "*.avi" -type f | xargs -I@@ -P 8 -n 1 bash -c "filename=@@; avconv -i \$filename -vcodec mpeg4 -b 100000k \${filename/.avi/}-mpeg.avi"
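The xargs version breaks on file names containing spaces. A Python alternative, as a sketch: one avconv process per file, eight at a time, with the argument list passed directly so no shell quoting is involved. The avconv flags are copied from the command above and the `-mpeg` suffix mirrors the xargs version; the function names are my own.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path
from typing import Callable, List, Sequence


def mpeg_command(src: Path) -> List[str]:
    """The avconv invocation from above; clip.avi is written to clip-mpeg.avi."""
    dst = src.with_name(src.stem + "-mpeg.avi")
    return ["avconv", "-i", str(src), "-vcodec", "mpeg4", "-b", "100000k", str(dst)]


def convert_all(sources: Sequence[Path], workers: int = 8,
                run: Callable[..., subprocess.CompletedProcess] = subprocess.run) -> List[int]:
    """Run one avconv per file, `workers` at a time (like xargs -P 8); return exit codes."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(lambda src: run(mpeg_command(src), check=False), sources))
    return [result.returncode for result in results]
```

Call it as convert_all(sorted(Path(".").glob("*.avi"))). Like the find version, it will also pick up any *-mpeg.avi files left over from a previous run.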

11/18/13