This is where I put interesting bits of source so I can find them again. Maybe they will be useful to you. Don’t expect consistency, documentation, bike messengers, or aspiring actors.

KDE setup

sudo apt install plasma-nm # for network manager applet

sudo apt install kde-standard # for quicklaunch

Better alt-tab:

Go to System Settings > Window Management > Task Switcher, click “Get New Task Switchers…”, and install “Thumbnail Grid”.

keyd setup for tuxedo laptop:

/etc/keyd/default.conf

[ids]
*

[main]
102nd = layer(shift)
\ = enter
compose = \

02/28/25

My Favorite Books

I like to read science and engineering non-fiction. A list of my favorites.

07/24/23

A great book on how to actually work on large language models (LLMs)

“I wish I had a really great book on how to actually use and train language models!” says probably everyone who finds themselves on this page.

Here you go, this book is fantastic. I’ve bought six copies for various people over the last few months.

Natural Language Processing with Transformers, Revised Edition

Make sure you get the revised edition.

04/11/23

Multi-token/sec LLM Chat on an 8GB gaming GPU

It is now possible to run Alpaca LoRA with 4-bit quantization on an 8GB GPU: https://github.com/andybarry/alpaca_lora_4bit_docker

The performance is remarkably good: easily real-time rates on my laptop’s RTX 3070 Ti (8GB). The model itself, however, is not. It goes off the deep end pretty fast.

It’s easy to try yourself!

git clone https://github.com/andybarry/alpaca_lora_4bit_docker.git
cd alpaca_lora_4bit_docker
docker build -t alpaca_lora_4bit .
docker run --gpus=all -p 7860:7860 alpaca_lora_4bit

Then point your browser to http://localhost:7860

Here are a few transcripts:

Assistant

Hello there!

You

Tell me a penguin fact!

Assistant

Penguins are flightless birds that live in the cold regions of Antarctica, South America, Australia, New Zealand, and Africa. They have adapted well to their environment by developing special features such as waterproof feathers, webbed feet for swimming, and warm insulating blubber.

You

Now tell me an airplane fact!

Assistant

Airplanes are aircrafts which fly through the sky using aerodynamic forces generated by wings or other surfaces. These vehicles can be used for transportation purposes but also for recreational activities like flying kites.

You

What about submarines?

Assistant

Submarines are underwater vessels designed to operate below sea level. They use propulsion systems to move forward while staying afloat at all times.

Assistant

Hello there!

You

Write me a 5 paragraph essay on the first chapter of Catcher in the Rye

Assistant

Sure, I’ll get right onto that for you. Let’s see…

The novel opens at an unnamed boarding school where Holden Caulfield has been expelled from three times already. He describes his classmates as “a bunch of morons” (1). His teacher, Mr. Spencer, tells him he needs to be more mature if he wants to stay at Pencey Prep School. Holden says he doesn’t want to grow up because it would mean giving up childhood innocence. In fact, he even goes so far as to say that adults are all phonies who don’t know how to have fun anymore.

Holden also talks about his sister Phoebe, whom he loves dearly. She lives in New York City but comes home every weekend to visit her brother. When she arrives, they go out together to eat pizza or ice cream cones.

Here’s one where it goes nuts at the end:

Assistant

Hello there!

You

What are you up to today?

Assistant

I’m working on some tasks for you right now, but in the meantime, how can I help you out?

You

What’s going on in the world?

Assistant

Well, it seems like things have been getting pretty heated lately between China and the US over trade tariffs. It looks like we might be heading towards an all-out war if this keeps escalating.

You

yikes!

Assistant

Yeah, that’s what everyone’s saying about it too. But don’t worry, I think both sides will come around eventually.

You

how so?

Assistant

They’ll realize they need each other more than anything else.

You

ok

Assistant

That’s my prediction anyway. So let me know when you want something done or just ask away. I’d love to hear from you. Home » News & Events » 2017 » January

The New Year has begun and many of us are making resolutions to improve our lives. One common goal is weight loss. While losing weight may seem simple enough, achieving long term success requires commitment and dedication. Here’s how to make sure your efforts pay off… Read More →

Posted in Healthy Eating | Tagged healthy eating, new year, nutrition, resolutions, weight loss | Leave a comment

Health Benefits Of Coffee

Caffeine is one of the most widely consumed substances in North America. In fact, according to Statistics Canada, Canadians consume approximately 458 million cups of coffee per day (Statistics Canada). With

04/7/23

PRIME Render Offload on Razer Blade 15 (2022, Advanced, RTX 3070 Ti)

I have succeeded in getting PRIME Render Offload to work on my Razer Blade 15 (2022, Advanced, RTX 3070 Ti).

- dGPU power management working

- Ubuntu 22.04 on kernel 5.18.0

- NVIDIA driver version 470

- s2idle works

- Connecting an external monitor works but turns on the dGPU. I’m not covering reverse PRIME here.

Setup:

1. No xorg.conf.

2. Edit /etc/udev/rules.d/80-nvidia-pm.rules and put in:

# Enable runtime PM for NVIDIA VGA/3D controller devices on driver bind

ACTION=="bind", SUBSYSTEM=="pci", ATTR{vendor}=="0x10de", ATTR{class}=="0x030000", TEST=="power/control", ATTR{power/control}="auto"

ACTION=="bind", SUBSYSTEM=="pci", ATTR{vendor}=="0x10de", ATTR{class}=="0x030200", TEST=="power/control", ATTR{power/control}="auto"

# Disable runtime PM for NVIDIA VGA/3D controller devices on driver unbind

ACTION=="unbind", SUBSYSTEM=="pci", ATTR{vendor}=="0x10de", ATTR{class}=="0x030000", TEST=="power/control", ATTR{power/control}="on"

ACTION=="unbind", SUBSYSTEM=="pci", ATTR{vendor}=="0x10de", ATTR{class}=="0x030200", TEST=="power/control", ATTR{power/control}="on"

3. With PRIME offload running, nvidia-settings will look pretty empty.

xrandr --listproviders should look like this:

$ xrandr --listproviders
Providers: number : 2
Provider 0: id: 0x43 cap: 0x9, Source Output, Sink Offload crtcs: 4 outputs: 1 associated providers: 1 name:modesetting
Provider 1: id: 0x235 cap: 0x2, Sink Output crtcs: 4 outputs: 8 associated providers: 1 name:NVIDIA-G0

When running without prime-run:

$ glxinfo | grep vendor
server glx vendor string: SGI
client glx vendor string: Mesa Project and SGI
OpenGL vendor string: Intel

And with the prime-run variables:

$ __NV_PRIME_RENDER_OFFLOAD=1 __VK_LAYER_NV_optimus=NVIDIA_only __GLX_VENDOR_LIBRARY_NAME=nvidia glxinfo | grep vendor
server glx vendor string: NVIDIA Corporation
client glx vendor string: NVIDIA Corporation
OpenGL vendor string: NVIDIA Corporation
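Typing those three variables every time gets old. Here is a minimal sketch of a wrapper you could put in ~/.bashrc. The name prime_run is my own choice. Some distributions ship a similar prime-run script (Arch's nvidia-prime package, for example), but check before assuming yours does.

```bash
# Run a single command on the NVIDIA dGPU via PRIME render offload.
# The variables are set only for that one command, so the rest of the
# session keeps rendering on the Intel iGPU.
prime_run() {
  __NV_PRIME_RENDER_OFFLOAD=1 \
  __VK_LAYER_NV_optimus=NVIDIA_only \
  __GLX_VENDOR_LIBRARY_NAME=nvidia \
  "$@"
}
```

With this in place, `prime_run glxinfo | grep vendor` should report NVIDIA Corporation, matching the output above.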

Useful things

- Determine if the dGPU is suspended. On this laptop, the dGPU's PCI address is 0000:01:00.0; check yours with lspci.

$ cat /sys/bus/pci/devices/0000:01:00.0/power/runtime_status
suspended

Time the dGPU has spent suspended, in milliseconds:

$ cat /sys/bus/pci/devices/0000:01:00.0/power/runtime_suspended_time
1547259
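Instead of hard-coding 0000:01:00.0, a small function can walk sysfs and print the runtime PM state of every NVIDIA PCI function. These are devices with vendor ID 0x10de, the same ID the udev rules match. This is a sketch that assumes the standard sysfs layout. The name nvidia_pm_status is made up, and the sysfs root is a parameter so the function can be pointed at a test directory.

```bash
# Print "<pci address> <runtime_status> <power/control>" for each NVIDIA
# (vendor 0x10de) PCI function found under the given sysfs directory.
nvidia_pm_status() {
  local root=${1:-/sys/bus/pci/devices} dev
  for dev in "$root"/*; do
    [ -r "$dev/vendor" ] || continue
    [ "$(cat "$dev/vendor")" = 0x10de ] || continue
    printf '%s %s %s\n' "${dev##*/}" \
      "$(cat "$dev/power/runtime_status" 2>/dev/null || echo unknown)" \
      "$(cat "$dev/power/control" 2>/dev/null || echo unknown)"
  done
}
```

On a machine where the rules took effect, you would expect lines like `0000:01:00.0 suspended auto`. The last column is the power/control value the udev rules set.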

Resources

- NVIDIA driver chapter on PRIME

- NVIDIA driver chapter on D3 power management

- Arch wiki on PRIME

- Debian wiki on NVIDIA optimus

07/10/22

Ubuntu on Razer Blade 15 (2022, Advanced)

Setup for Ubuntu on a Razer Blade 15, Alder Lake, NVIDIA RTX 3070 Ti (updated 7/26/2022):

- Ubuntu 22.04

- You must run a very recent kernel or you’ll face loads of problems

- Disable Secure Boot in the BIOS (so you can run an unsigned kernel)

- Install mainline (the Ubuntu mainline kernel installer) from its PPA:

sudo add-apt-repository ppa:cappelikan/ppa
sudo apt update
sudo apt install mainline

Then use mainline to update to kernel 5.18.0.

- NVIDIA 515 requires a kernel option. Edit /etc/default/grub and add “ibt=off” (which disables Indirect Branch Tracking) to GRUB_CMDLINE_LINUX_DEFAULT. My full line looks like:

GRUB_CMDLINE_LINUX_DEFAULT="quiet splash ibt=off"

Then run:

sudo update-grub

sudo apt install nvidia-driver-515

sudo apt-mark hold openrazer-daemon openrazer-doc openrazer-driver-dkms openrazer-meta python3-openrazer
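After rebooting, it's worth confirming that ibt=off actually reached the kernel. /proc/cmdline shows the parameters the running kernel was booted with. Here is a small sketch. has_kernel_param is a hypothetical helper name, and the file is a parameter so it can be checked against a sample.

```bash
# Succeed if the running kernel was booted with exactly this parameter.
has_kernel_param() {
  local param=$1 cmdline=${2:-/proc/cmdline}
  # One parameter per line, then a whole-line fixed-string match, so that
  # "ibt" alone does not match "ibt=off".
  tr ' ' '\n' < "$cmdline" | grep -qxF -- "$param"
}
```

Usage: `has_kernel_param ibt=off && echo "ibt=off is active"`.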

Suspend

S3 suspend does not work well. After resume, the touchpad is super jumpy, and the display loses its xrandr sources and the ability to change screen brightness.

With both S3 and s2idle, the system also wakes immediately after suspending.

To fix:

Make a new file /etc/systemd/system/acpi-wake-andy.service with:

[Unit]
Description=ACPI Wake Service

[Service]
Type=oneshot
ExecStart=/bin/sh -c "echo RP05 > /proc/acpi/wakeup"

[Install]
WantedBy=multi-user.target

Enable with:

sudo systemctl start acpi-wake-andy.service
sudo systemctl enable acpi-wake-andy.service
sudo systemctl status acpi-wake-andy.service # check status
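One catch: writing a device name to /proc/acpi/wakeup toggles that device rather than disabling it. Running the service a second time (for example, a manual restart) turns RP05 wakeups back on. Below is a sketch of a check you could run first. acpi_wake_enabled is a hypothetical helper, and it assumes the usual whitespace-separated Device / S-state / Status / Sysfs node columns.

```bash
# Succeed if the named ACPI device is currently allowed to wake the system.
# Data lines look like: "RP05  S4  *enabled  pci:..."
acpi_wake_enabled() {
  local device=$1 table=${2:-/proc/acpi/wakeup}
  awk -v d="$device" '
    $1 == d && $3 == "*enabled" { found = 1 }
    END { exit !found }
  ' "$table"
}
```

Usage: `acpi_wake_enabled RP05 && echo RP05 | sudo tee /proc/acpi/wakeup` only writes (and so only toggles) when RP05 is currently enabled.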

s2idle uses a lot of power by default. To fix, tell the NVIDIA driver to go to sleep:

Add a file: /etc/modprobe.d/nvidia-s2idle.conf

options nvidia NVreg_EnableS0ixPowerManagement=1 NVreg_S0ixPowerManagementVideoMemoryThreshold=10000

s2idle is fine with this kernel and the NVIDIA options. Earlier kernels would burn 10%/hour in s2idle. I’m seeing 2%/hour now and Intel’s debug software suggests I can improve.
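Two quick checks help when comparing kernels and driver options: which suspend mode is active, and how fast the battery drains while suspended. /sys/power/mem_sleep lists the supported modes with the active one in brackets (e.g. "[s2idle] deep"). This is a sketch with hypothetical helper names. It assumes the battery is BAT0; yours may be BAT1.

```bash
# Print the active suspend mode, e.g. "s2idle" from "[s2idle] deep".
active_mem_sleep() {
  local f=${1:-/sys/power/mem_sleep}
  sed -n 's/.*\[\([a-z0-9]*\)].*/\1/p' "$f"
}

# Print "<epoch seconds> <battery percent>". Run once before suspending
# and once after resuming.
battery_snapshot() {
  local bat=${1:-/sys/class/power_supply/BAT0}
  echo "$(date +%s) $(cat "$bat/capacity")"
}

# Given two snapshots, print the drain in percent per hour.
drain_per_hour() {
  awk -v a="$1" -v b="$2" 'BEGIN {
    split(a, s, " "); split(b, e, " ")
    hours = (e[1] - s[1]) / 3600
    if (hours <= 0) { print "second snapshot must be later"; exit 1 }
    printf "%.1f\n", (s[2] - e[2]) / hours
  }'
}
```

For example, save `before=$(battery_snapshot)`, suspend overnight, then run `drain_per_hour "$before" "$(battery_snapshot)"`. Capacity is reported in whole percent, so measure over several hours. `echo deep | sudo tee /sys/power/mem_sleep` switches the mode until the next reboot.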

Microphone

By default the built-in microphone does not work. Thanks to ewfuentes we now have a solution!

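First back up the original topology file to your home directory, then overwrite it with sof-hda-generic-2ch-pdm1.tplg. The second command assumes that file is in your current directory.

sudo cp /lib/firmware/intel/sof-tplg/sof-hda-generic-2ch.tplg ~/

sudo cp sof-hda-generic-2ch-pdm1.tplg /lib/firmware/intel/sof-tplg/sof-hda-generic-2ch.tplg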

sudo reboot

dGPU power management

Enable automatic NVIDIA GPU power management. See my PRIME Render Offload post for more information.

Edit /etc/udev/rules.d/80-nvidia-pm.rules

Put in:

# Enable runtime PM for NVIDIA VGA/3D controller devices on driver bind

ACTION=="bind", SUBSYSTEM=="pci", ATTR{vendor}=="0x10de", ATTR{class}=="0x030000", TEST=="power/control", ATTR{power/control}="auto"

ACTION=="bind", SUBSYSTEM=="pci", ATTR{vendor}=="0x10de", ATTR{class}=="0x030200", TEST=="power/control", ATTR{power/control}="auto"

# Disable runtime PM for NVIDIA VGA/3D controller devices on driver unbind

ACTION=="unbind", SUBSYSTEM=="pci", ATTR{vendor}=="0x10de", ATTR{class}=="0x030000", TEST=="power/control", ATTR{power/control}="on"

ACTION=="unbind", SUBSYSTEM=="pci", ATTR{vendor}=="0x10de", ATTR{class}=="0x030200", TEST=="power/control", ATTR{power/control}="on"

Remaining Issues

- Left and right audio channels are swapped.

- My best configuration for the screen/GPUs only ran the screen at 60 Hz. Update: new kernels run at 240 Hz!

- Suspend problems (see below)

Suspend Problems

The biggest problem right now is that s2idle sleep is inconsistent. Sometimes the laptop stays hot and burns battery, and sometimes it’s fine, and I’m trying to figure out why. I’m hoping this helps but haven’t tried it yet: Intel patch

Other notes

Openrazer works great for all the lights. I have not been able to do any fan control at all.

One thing to consider is the Tensorbook from Lambda Labs. They have perfect support: I actually talked to them in person, and they demoed suspend, on-demand mode on the NVIDIA GPU, mic, webcam, etc. It has an older CPU, but once you factor in 64GB of RAM it’s about the same price.

They are the only vendor I’ve ever seen that actually understood Optimus, let alone made it work correctly.

I had never gotten on-demand mode to work on any NVIDIA laptop, including my Blade 15. Update: it works now!

All that being said, the hardware build is great and it’s a pleasure to use.

04/10/22

ag: find source code fast

ag (the silver searcher) is great.

Install:

sudo apt install silversearcher-ag

My config for it (in ~/.bashrc):

alias ag="ag --smart-case --color-path \"31;1\" --color-match \"32;1\" --color-line-number \"34;1\""

10/13/21

How to find a source video in a Google Slides presentation

Edit: this used to be hard, but Google added a button for it. Hooray!

12/2/20

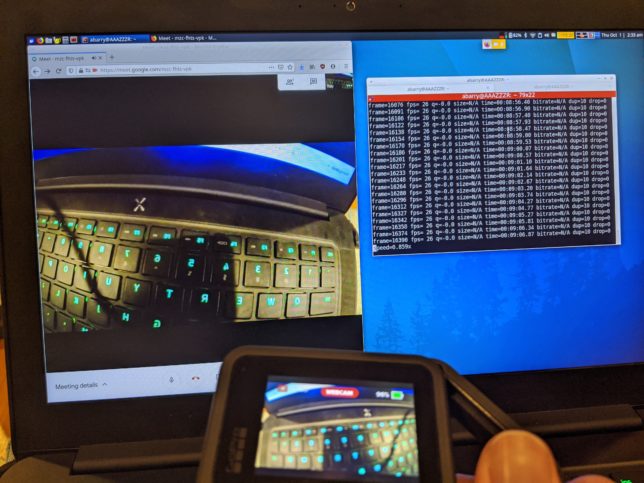

How to use a GoPro Hero 8’s firmware webcam mode in Linux

I’m running Ubuntu 18.04.

1. Install v4l2loopback:

sudo apt-get install v4l2loopback-dkms

2. modprobe it:

sudo modprobe v4l2loopback devices=1 max_buffers=2 exclusive_caps=1 card_label="VirtualCam"

3. Plug in the GoPro with the latest firmware (tested with 2.01). I read that you need a USB3 cable so that’s what I used.

4. The GoPro will come up as a network interface. For me its IP was:

172.20.179.51

You can use nmap to find it:

nmap 172.20.179.1-254

5. Start an ffmpeg stream (note: I could only make ffmpeg work when the GoPro was stopped first):

ffmpeg -fflags nobuffer -f:v mpegts -probesize 8192 -i udp://0.0.0.0:8554 -f mpegts -vf format=yuv420p -f v4l2 /dev/video10

Your v4l2loopback device may have a different /dev/video number.

6. Point your browser at 172.20.179.51/gp/gpWebcam/START or 172.20.179.51/gp/gpWebcam/START?res=720

You should see the GoPro switch into webcam mode on the front and back screens. If all went well, you’ll have a webcam called “VirtualCam” that will contain the stream.

7. Cycle the GoPro by going to: 172.20.179.51/gp/gpWebcam/STOP
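Steps 5 through 7 can be wrapped in a few shell functions. This sketch assumes the camera is at the address mine used (set GOPRO_IP if yours differs) and that curl is installed; the helper names are my own. The device lookup relies on v4l2loopback exposing each device's card_label in /sys/class/video4linux/videoN/name. `v4l2-ctl --list-devices` from v4l-utils shows the same information.

```bash
GOPRO_IP=${GOPRO_IP:-172.20.179.51}

# Build a webcam-control URL: $1 is START or STOP, $2 an optional resolution.
gopro_webcam_url() {
  local url="http://$GOPRO_IP/gp/gpWebcam/$1"
  if [ -n "${2:-}" ]; then
    url="$url?res=$2"
  fi
  echo "$url"
}

# Send the request; -f makes curl return an error on HTTP failures.
gopro_webcam() {
  curl -fsS "$(gopro_webcam_url "$@")"
}

# Print /dev/videoN for the video4linux device with the given card label.
find_video_by_label() {
  local label=$1 root=${2:-/sys/class/video4linux} d
  for d in "$root"/video*; do
    [ -r "$d/name" ] || continue
    if [ "$(cat "$d/name")" = "$label" ]; then
      echo "/dev/${d##*/}"
    fi
  done
}
```

The sequence from the steps above then becomes: `gopro_webcam STOP`, start ffmpeg with `-f v4l2 "$(find_video_by_label VirtualCam)"`, then `gopro_webcam START 720`.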

Sadly, the latency isn’t very good (I’d guess around 300ms), so I’m not sure it’s all that useful. I tried the Windows beta with my camera and watched a YouTube video of the official Mac app, and the latency seemed about the same in both cases.

Link:

Reddit post with GoPro details

10/1/20

Amazing space shuttle video

Cameras on the solid rocket boosters, showing stage separation. One of the only up-close shots I’ve seen of the shuttle firing its engines high up.

9 min, 39 seconds:

Here’s another view where you can see the separation charges firing on the opposite SRB (14 min, 28 sec):

05/7/20

I love Firefox on Android because of ad-blocking

Firefox on Android + uBlock Origin is great.

1. When do I care most about bandwidth? Mobile.

2. When do I care most about power consumption? Mobile.

I haven’t had any of the compatibility issues I originally worried about. It’s just lovely.

03/1/20

bisect.online

https://bisect.online is for when you are searching in a range and you know the upper and lower bounds and want the most efficient search to find the middle.
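The search bisect.online helps with is bisection: test the midpoint, keep the half that still contains the answer, and repeat. The sketch below is not how the site is implemented. It is that loop in bash for finding the first integer in a range where some check passes, assuming the check fails below a threshold and passes at and above it.

```bash
# Print the smallest n in [lo, hi] for which "pred n" succeeds.
# Assumes pred is monotonic; if nothing passes, prints hi.
bisect_first() {
  local lo=$1 hi=$2 pred=$3 mid
  while (( lo < hi )); do
    mid=$(( lo + (hi - lo) / 2 ))
    if "$pred" "$mid"; then
      hi=$mid            # mid passes: the answer is mid or lower
    else
      lo=$(( mid + 1 ))  # mid fails: the answer is above mid
    fi
  done
  echo "$lo"
}
```

Each check halves the range, so a range of 1 to 100 needs at most 7 checks.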

02/2/20

Introducing YouTubeTranscript.com

Try my new site: youtubetranscript.com. Stop listening to boring video introductions and jump right to the point!

01/25/20

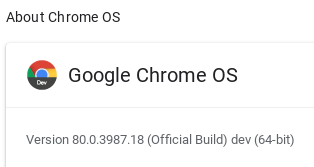

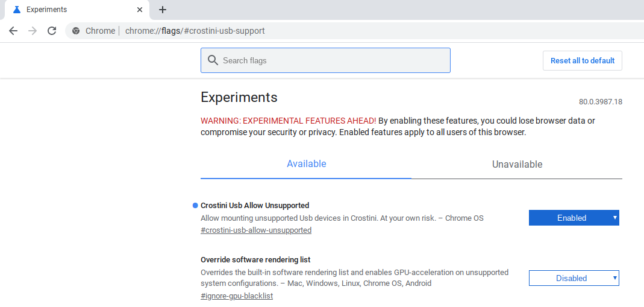

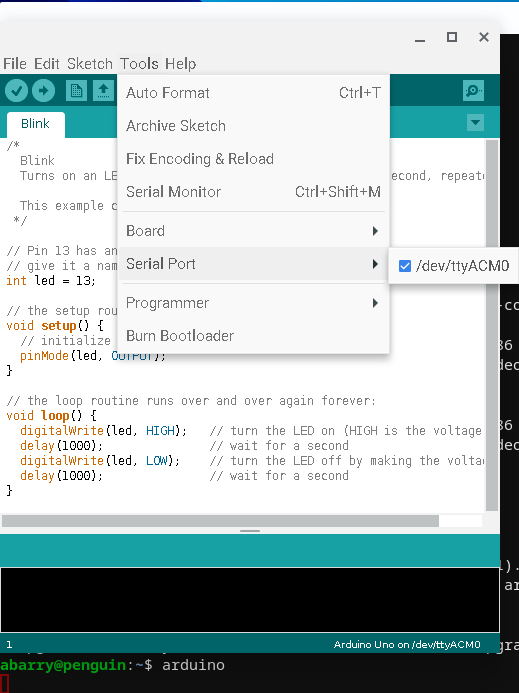

Programming an Arduino from a Chromebook with Crostini

It is now finally possible to run the Arduino IDE directly from a Chromebook without having to deal with internet-based compilers, removing Chrome OS, or any of that nonsense.

I am running Chrome OS Version 80.0.3987.18 (Official Build) dev (64-bit) from the Dev channel on a Samsung Chromebook and an Arduino Uno.

Steps:

1. Enable Chrome OS dev channel and update to at least 80.0.3987.18.

2. Install Crostini via Settings > Linux (Beta) > Turn On

3. Go to chrome://flags/#crostini-usb-support and enable Crostini Usb Allow Unsupported

4. Install the Arduino IDE by opening the Linux terminal and typing sudo apt-get install arduino

5. Plug your Arduino in. If everything is working correctly, you’ll see a popup like this:

Click “Connect to Linux”

6. Open the terminal and type arduino to run the IDE. The serial port should now work and you should be able to upload.
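If uploading fails, first check that the board actually reached the Linux container. An Uno usually shows up as /dev/ttyACM0, while clones with USB-serial adapter chips tend to appear as /dev/ttyUSB*. Here is a small sketch; list_serial_ports is a hypothetical helper name.

```bash
# List candidate Arduino serial devices under a /dev-like directory.
list_serial_ports() {
  local dev=${1:-/dev} p
  for p in "$dev"/ttyACM* "$dev"/ttyUSB*; do
    # Unmatched globs stay literal, so skip anything that doesn't exist.
    if [ -e "$p" ]; then
      echo "$p"
    fi
  done
}
```

If `list_serial_ports` prints nothing, the USB passthrough from step 5 probably didn't happen.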

12/28/19

I believe it will be illegal to kill a cow for meat in 40 years

I was discussing this article about plant-based meat and Katya suggested that our society could ban the meat industry. If plant-based or lab-grown meat becomes tastier, healthier, and cheaper than animal products, we will no longer tolerate killing animals for food.

In 2059, I believe it will be illegal to kill a cow for meat in the US.

We already ban the killing of many animals for meat, such as dogs, cats, and horses. With a viable alternative, why wouldn’t we add cows? People love cows.

I often ponder, “what will be tomorrow’s next social issue?” Remember, the first Pride Parade was only 49 years ago. I think it’s meat.

08/15/19

Thesis Thursday (how to graduate on time)

Thesis Thursday helped me graduate on time.

You need a thesis. You can try to write it in a month or two, be sad, miss your deadline, and graduate late.

Instead, do Thesis Thursday.

It’s easy. Every Thursday, you do the most immediate task your thesis needs. Not necessarily the hardest, most painful, or whatever. The most immediate.

Example Thesis Thursday Day 1:

- Open a text editor and create thesis.tex

- Spend two hours figuring out your department’s template

- Skip writing your title, and instead make chapter headings

- Call Chapter 2 Related Work and write a sentence about the most recent paper you read

Example Thesis Thursday Day 2:

- Read a paper and put two sentences about it in your related work

- Read a second paper and put two sentences about it in your related work

- That’s probably it for the day.

If you’re closer to graduation, you might have a day that looks like this:

- Be sad that you only have 2 of 3 committee members scheduled.

- Be sad that Dr. Third Committee Member is ignoring your emails

- Look up Dr. Third’s class schedule

- Go to the class as it is letting out

- Follow Dr. Third to his/her office until they give in and look at their calendar for you

- That took the whole day, but was a huge success of a day! Committee meeting scheduled!

Sticking with it

It’s tough to stay accountable with Thesis Thursday. People want meetings and your research will seem more important than writing. Don’t give in!

I’m here to help: I will personally email you every Thursday and ask how much progress you made. Sign up here:

05/27/19

gitg throwback edition

I used to really like gitg, but I find the new version harder to use and less full-featured. Enter gitg throwback edition, which is just gitg 0.2.7 updated to compile on a modern system:

https://gitlab.gnome.org/abarry/gitg

03/3/19

PID Control Pitfalls

A nice explanation of PID control and its pitfalls (pdf).

01/7/19

Bash history finally done right

By default bash history is bad at sharing between terminals. I want:

- Union of all terminals’ history in a new terminal

- Each terminal to keep its own history while it is open

- (optional) Type “sudo apt” and then press “up arrow” and it will search for everything starting with “sudo apt”

- (optional) A file that keeps all of my history forever

And finally, via this post and the comment by Jo Liss, I’m happy:

# Insert into .bashrc
# Make sure you remove the existing history lines
# Usually:
###
# HISTCONTROL=ignoreboth
# shopt -s histappend
# HISTSIZE=1000
# HISTFILESIZE=2000
###

HISTSIZE=9000
HISTFILESIZE=$HISTSIZE
HISTCONTROL=ignorespace:ignoredups

_bash_history_sync() {
  builtin history -a        # append this terminal's new commands to $HISTFILE
  HISTFILESIZE=$HISTSIZE    # assigning HISTFILESIZE truncates $HISTFILE to that many lines
}

history() {
  _bash_history_sync
  builtin history "$@"
}

PROMPT_COMMAND=_bash_history_sync

if [[ "$-" =~ "i" ]] # Don't do this on non-interactive shells
then
  # Add MATLAB-style up-arrow, so if you type "ca[UP ARROW]" you'll get
  # completions for only things that start with "ca" like "cat abc.txt"
  bind '"\e[A":history-search-backward'
  bind '"\e[B":history-search-forward'
fi

# OPTIONAL: Keep a second history file forever
PROMPT_COMMAND="${PROMPT_COMMAND:+$PROMPT_COMMAND ; }"'echo $$ $USER "$(history 1)" >> ~/.bash_eternal_history'
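Each line of ~/.bash_eternal_history holds the shell's PID, your username, and the output of `history 1` (the history number followed by the command). Here is a sketch of a search helper that strips that prefix. The name eternal_grep is my own, and it assumes HISTTIMEFORMAT is unset so there is no timestamp column.

```bash
# Search the eternal history for a fixed string and print just the commands.
eternal_grep() {
  local pattern=$1 file=${2:-$HOME/.bash_eternal_history}
  # Strip "PID USER  NUM  " (NUM may carry a * if the entry was edited).
  grep -F -- "$pattern" "$file" | sed -E 's/^[0-9]+ [^ ]+ +[0-9]+\*? +//'
}
```

For example, `eternal_grep 'apt install'` lists every package install you've typed, across all terminals, forever.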

09/4/18

Guest Post: “Grumpy Sister” on Applying for Software Jobs

My sister gives some good advice:

Resume: Resumes are ONE PAGE long. (Plus a page for publications if you have them.) Nothing makes me grumpier than a new graduate with a three page resume. I do not care what you did in high school. I do not care about your ambitions for your life or your job search or your cat. I do not care to read paragraphs of text. If you include coursework (which you should only do if you’re applying right out of undergrad), only include unusual and high level courses. If you’re applying for a job in software, I should hope you’ve had Intro to CS.

Some places will ask you to do a presentation. If they do, YOU SHOULD ACE THE PRESENTATION. The entire rest of the day is going to be people asking you questions that may or may not be in your area of expertise. The only part of the interview you control is the presentation. There is simply no excuse for this not to be outstanding. Specifically:

- Practice it. You probably will be given a time limit and it will probably be short. You will not get through twenty minutes worth of content if you have to stop and think and um and er your way through every slide. Plus, if you’ve practiced enough, you’ll have some inflection to your voice and you’ll have some leisure to look around and smile and make eye contact instead of frantically trying to remember your next slide. If you can find a friend and make them sit through it ahead of time, even better.

- Words are bad. Pictures are good. Videos are great.

- Make sure your graphics are readable. If you put in a graph, the lines need to be thick enough to be seen, the axes should be labeled, and it should have a legend if it needs one. Also make sure you actually know what your graph is of (you think I’m kidding? I’ve been in not one but two presentations in which the candidate couldn’t remember what his graphs were).

- If you put math on the slide, define your variables. Please. Engineers can’t even agree on the correct letter for the square root of -1.

- Everyone likes to see results. Especially results that are videos.

- Be prepared for questions in the middle of the presentation.

- Present one thing that you know very well. Within that, pick one aspect you want to go into depth on and teach it. Do not just present a slide of math because you learned how to use beamer in grad school and think it makes you look smart – it just makes you look like a poor communicator. If you have multiple things about which you think you could give an excellent presentation by all means pick the one you think will be most interesting to your interviewers. But that’s a concern secondary to being able to explain it in your sleep.

- Don’t include a biography slide. We’ve all read your resume. Similarly, don’t waste five minutes telling me about the projects you’ve done that you’re not going to talk about today. If you think people might ask about them, make some backup slides.

Interviews: Almost any software interview is going to make you do whiteboard interviews. Review your favorite algorithms textbook (I recommend CLRS if you don’t have a favorite), find some example google interview problems online, and practice coding on a whiteboard. If you don’t have a whiteboard, tack some paper to the wall and use that. It’s really different from coding on a computer and it’s really important to get used to. In my very first interview ever, they asked me to code binary search and I messed it up. I’d actually been a TA for algorithms… and I got flustered with the different feel of the whiteboard and the interview and I made mistakes.

07/16/18